Data centers in Ashburn, Virginia.

Fast, Flexible Solutions for Data Centers

Data centers offer growing opportunities to pave the way for reliable, affordable electricity for all.

Key Insights

- Data center operators are ready to invest in efficient, flexible, and low-cost energy sources that can mitigate stranded asset risks for utilities and keep ratepayer costs in check.

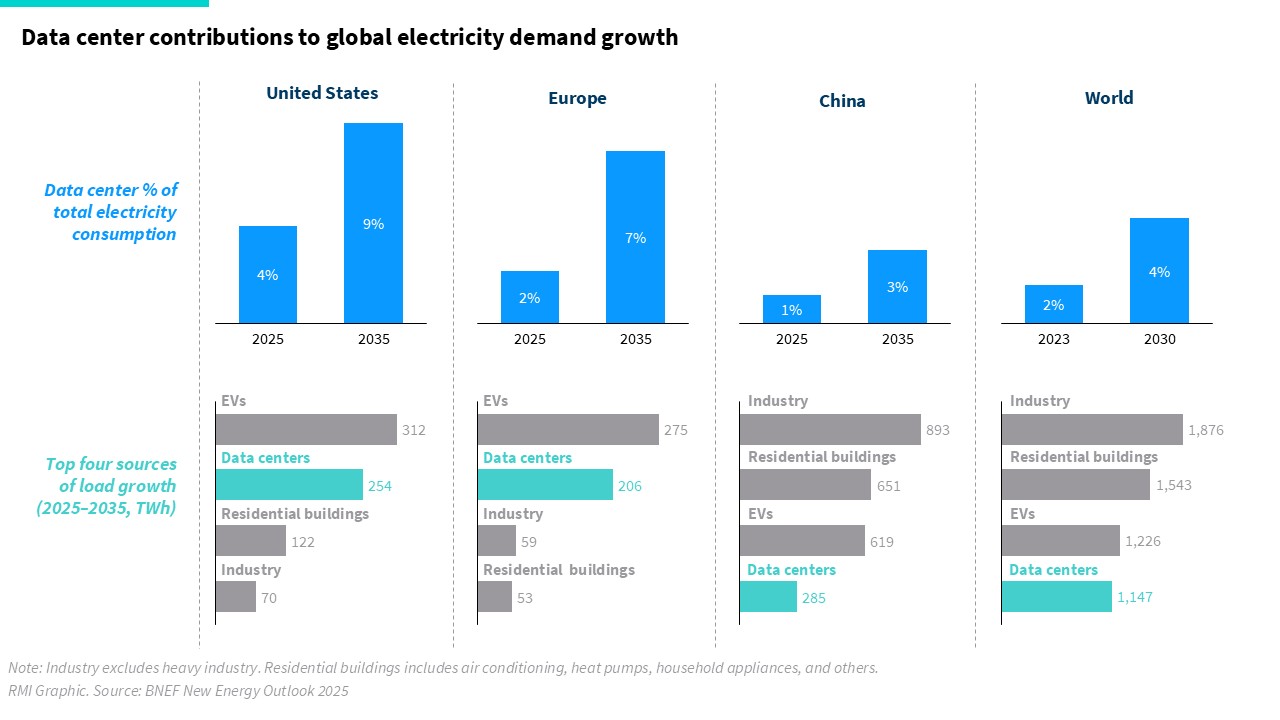

- Data centers use just 2 percent of global electricity today — and may account for approximately 10 percent of projected electricity demand growth between 2024 and 2030.

- Many utilities have a track record of over-forecasting demand, spending billions of dollars building power plants for load that did not materialize.

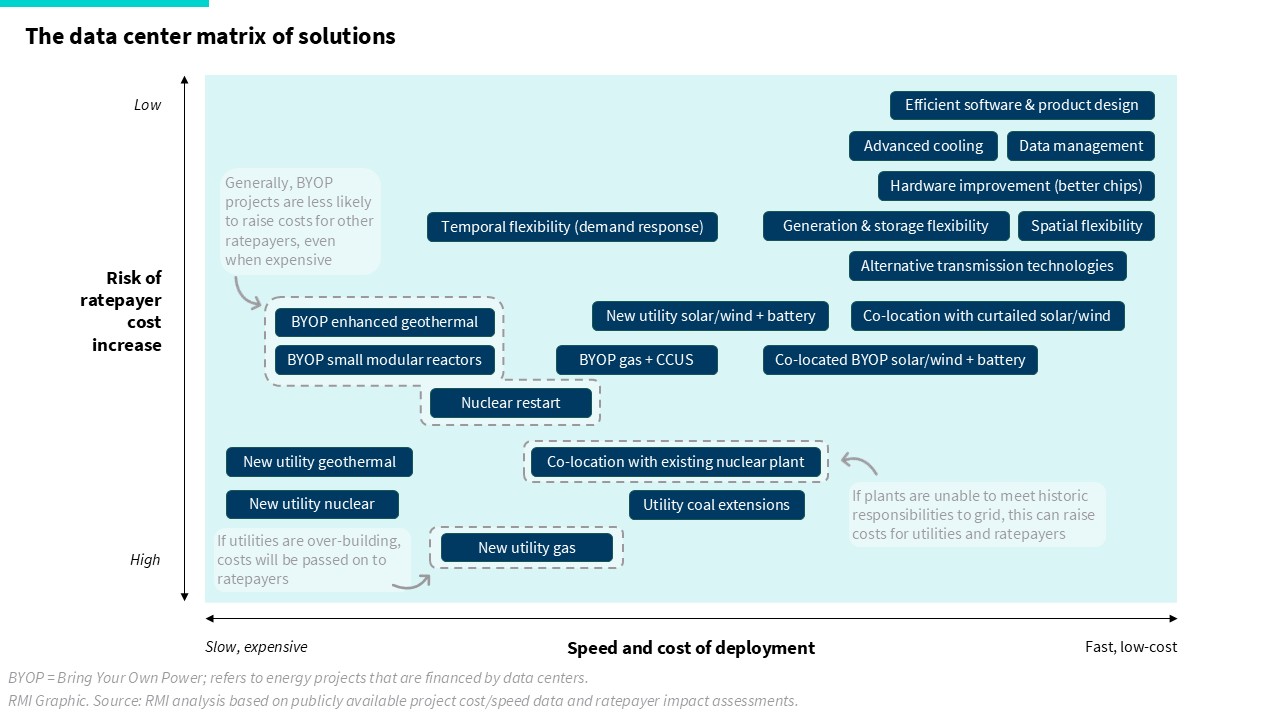

- We mapped a range of energy solutions for data centers that can temper the risks of over-building and high ratepayer costs.

Before you panic: data centers use just 2 percent of global electricity today

Data centers are large commercial facilities that house servers and other equipment used to provide digital services such as email, streaming, real-time navigation, running and developing software, cloud storage, and, increasingly, artificial intelligence (AI) applications for individuals, businesses, and government agencies of all sizes. Data centers require large amounts of electricity to run their equipment and keep it cool.

Recently, forecasts of rapid growth in AI and traditional cloud computing have been causing panic about electricity demand. Media headlines describe the situation as explosive and out of control, suggesting that countries could eventually run out of power.

However, data centers account for just 2 percent of global electricity demand today and are projected to account for approximately 10 percent of electricity demand growth between 2024 and 2030. Globally, data center load growth is expected to be smaller than load growth from other sources, such as industrial operations, electric vehicles, household appliances, and space cooling.

It’s also worth remembering how power panics in the past have played out. In 1999, Forbes ran an article declaring that half of the electric grid would be powering the digital economy within the next decade. In 2017, the World Economic Forum warned that Bitcoin would consume more power than the entire world by 2020. But neither of these happened. Globally, the share of electricity going to data centers has remained under 2 percent, and cryptocurrency today consumes just 0.5 percent of global electricity.

Exhibit 1

The data center challenge is best understood at the local level

One potential challenge is the tendency for data centers to concentrate in specific regions due to preferences for fast connectivity and cheap power, land, and water. The shift to hyperscale data centers — which can be as large as 1–2 gigawatts (GW), more than 100 times the size of traditional data centers — is likely to further contribute to data center concentration.

Fortunately, local jurisdictions and utilities have a range of options available to meet and manage load growth. These include building new generation capacity; deploying grid enhancing technologies; implementing energy efficiency, demand response, or bring-your-own-power (BYOP) requirements; requiring capital commitments; and even placing moratoriums on new data center requests. Data centers have also been proactive: shifting facility construction to new geographic markets, investing in flexibility and energy efficiency research and development, installing their own generation and storage sources, and more.

While data center demand is rising, it is just one part of the broader electricity load growth puzzle. In the past, utilities have successfully managed greater load growth than is expected over the next decade. This time, we have an even greater variety of technologies and policies available, as well as an ambitious data center industry willing to make the necessary investments.

A fork in the road: the path well-trodden poses affordability risks

The concern is not whether data centers will cause regions to run out of power, but how utilities and regulators respond to the increased electricity demand, and the impact those decisions will have on ratepayer affordability and net-zero goals.

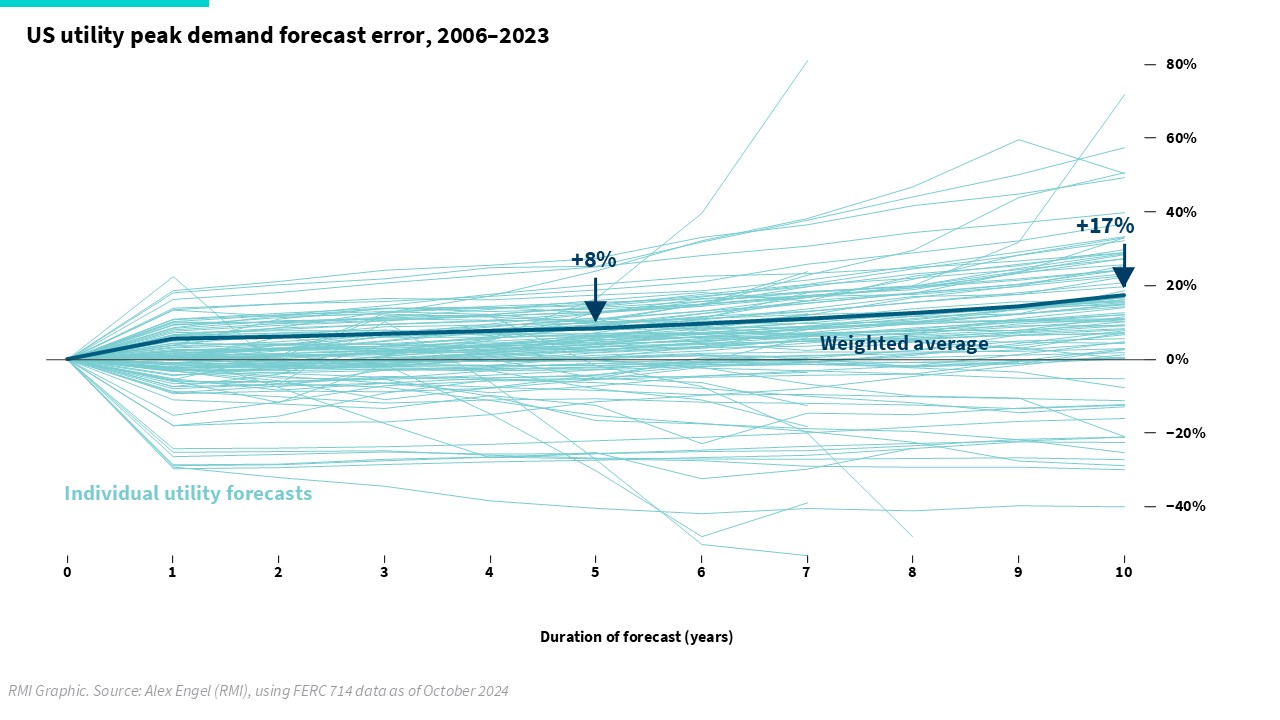

Utilities have a history of over-forecasting: in the United States, utilities over-forecast 10-year demand growth by more than 17 percent between 2006 and 2023. Such systematic over-forecasting means that utilities have spent billions of dollars building power plants for load that never materialized, leaving regular ratepayers to foot the bill.

Exhibit 2

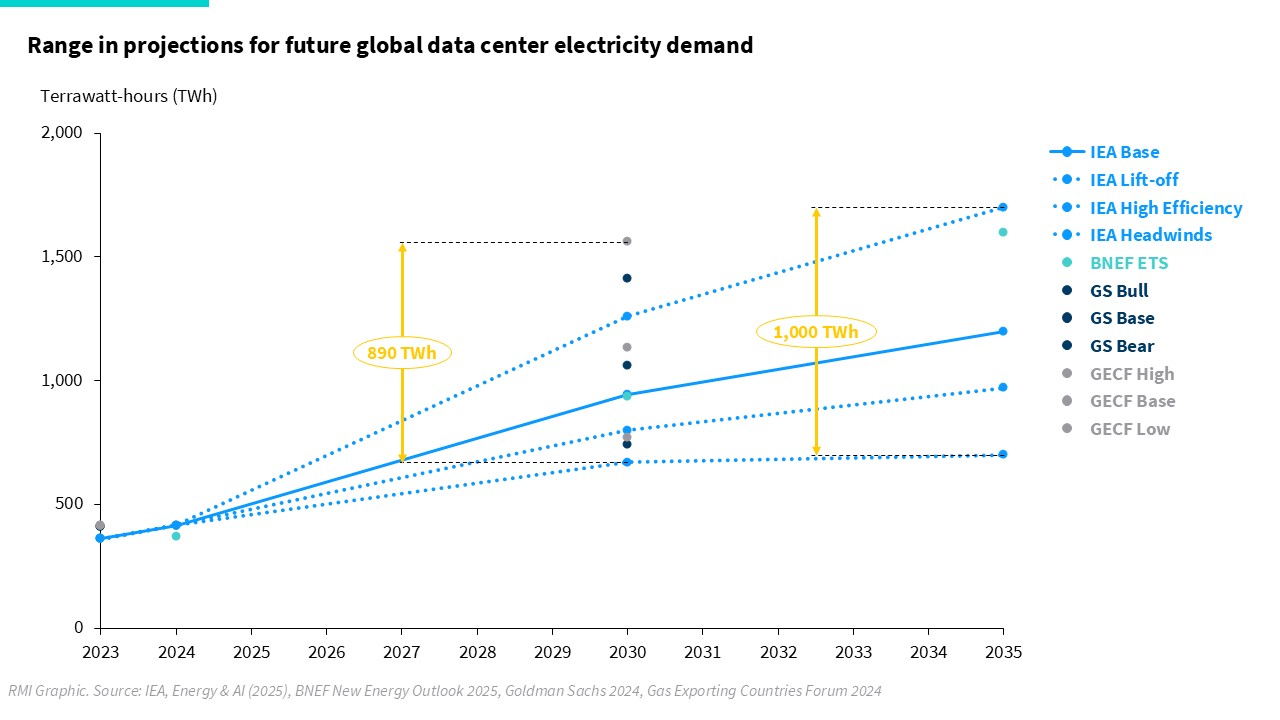

This risk will become even more salient in the coming years given the high uncertainty of data center load growth. Forecasts vary widely: according to the IEA, global data center electricity consumption could increase by anywhere from 300,000 GWh to 1.3 million GWh by 2035. There are also significant unknowns around future improvements in hardware and software efficiency, the evolution of AI products and training practices, and the emergence of new business models, each of which could sway overall data center energy demand. Finally, not all proposed data centers will be built or fully utilized: some estimate that speculative interconnection requests could exceed the number of data centers actually built by a factor of 5 to 10, as developers “shop around” for the fastest interconnection opportunities and cancel projects if the market becomes oversupplied.

Exhibit 3

Meanwhile, utilities across the United States are reportedly starting to build new natural gas plants in preparation for this uncertain data center demand. Our analysis of utility integrated resource plans (IRPs) shows that planned gas capacity stayed relatively flat from 2021 to 2024 but jumped about 20 percent, or 52 GW, in the past year. This is bad news for ratepayers: if data center demand falls short of expectations, ratepayers may still be saddled with paying for the new plants and exposed to fuel price volatility.

In some regions, utilities are also extending the lifetimes of coal plants, which can lead to higher electricity rates, since coal plants become more expensive to operate as they age.

Exhibit 4

A better path forward: managing load growth responsibly with fast, low-cost solutions

A more responsible approach to managing load growth focuses on both the supply and demand side of the equation, mitigating ratepayer impact while creating benefits for data centers and utilities. The best solutions will be measures that:

- Reduce the energy data centers use in the first place.

- Enhance flexibility of when and where data centers use energy.

- Deliver energy supply fast, modularly, reliably, and at lower cost.

- Ensure the energy supply is right-sized to confirmed loads rather than speculative ones.

Combined, these solutions will help data centers scale rapidly while keeping costs as low as possible; help policymakers and utility regulators balance their priorities of attracting economic growth, ensuring energy affordability for their constituents, and generating energy abundance for all ratepayers; and help utilities minimize stranded asset risks.

Exhibit 5 shows the range of energy solutions that are being explored today in response to rising data center energy demand.

Exhibit 5

Reduce energy demand in the first place. Energy efficiency provides benefits to all stakeholders involved. For data center operators and customers, it reduces energy consumption, leading to lower energy bills. For utilities, it reduces the burden on the grid, offering an opportunity to avoid, delay, or reduce the need for costly grid infrastructure upgrades, new generators, or deferred retirements. For the private sector and investors, it provides a compelling business and investment opportunity in new and existing technologies and services that help operators avoid costs and reduce waste. Policymakers around the world are already moving to seize this opportunity, with many jurisdictions implementing energy efficiency requirements for data centers.

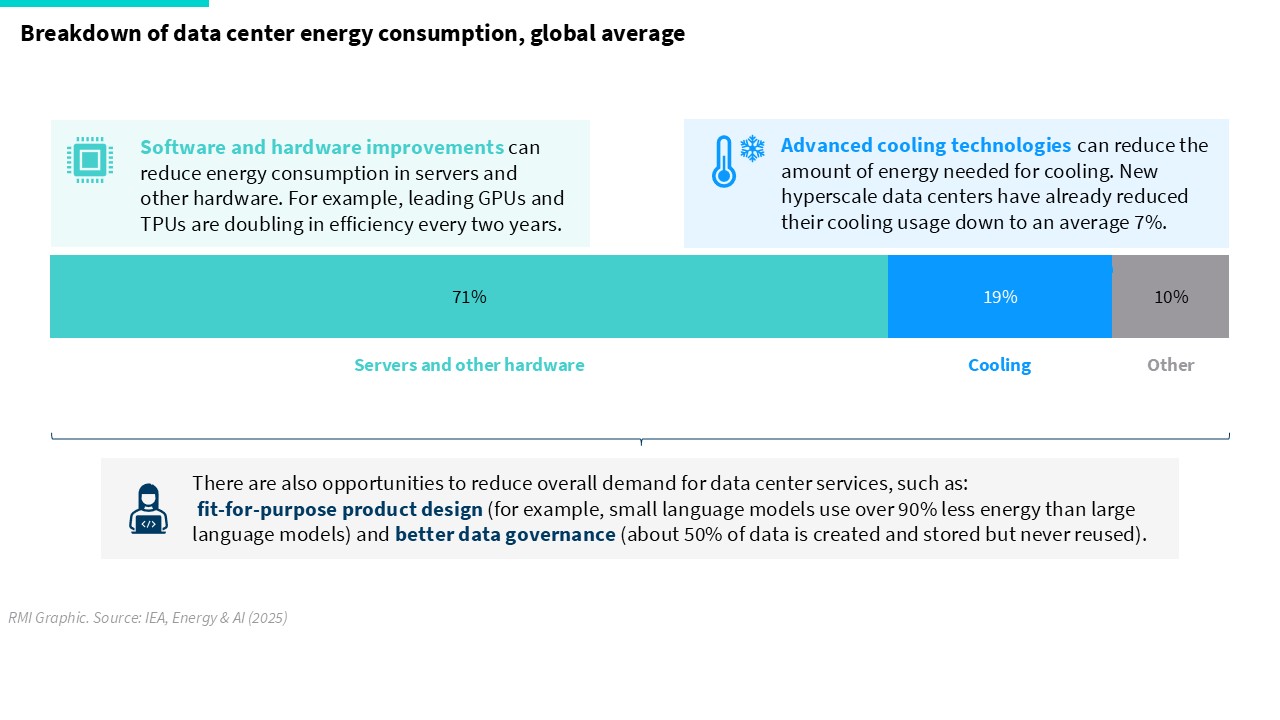

There is a wide range of efficiency opportunities that can reduce data center energy use:

- Cooling: Cooling accounts for about 20 percent of overall data center energy consumption today. New data centers, especially hyperscale facilities, have significantly improved cooling efficiency, with cooling averaging about 7 percent of their energy use, thanks to technologies such as liquid cooling and economizers. Energy demand for cooling could also be reduced by siting data centers in cooler regions or by adopting solutions that allow servers to operate at higher temperatures.

- Hardware: The energy efficiency of computers has improved exponentially, doubling about every two years. This is a major reason why the IT sector has historically consumed less energy than expected, and the trend continues with leading machine learning hardware such as GPUs and TPUs.

- Software: Software and its underlying algorithms (AI and otherwise) can often run far more efficiently than they currently do, using less computing power, and thus less energy, for the same result. For example, simply grouping tasks and running them together instead of one at a time, known as batch processing, can reduce energy consumption by as much as 50 percent.

- Product design: Fit-for-purpose AI products may significantly lower energy consumption. Many AI services can be delivered by small language models built to run specific tasks with fewer resources, which can consume more than 90 percent less energy than the large language models widely used today.

- Data management: Over 50 percent of data is so-called “dark data” — created and stored but never re-used. Storing this data requires significant energy use. Better data management and governance policies can help reduce dark data and the associated energy consumption.
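The cooling and batching figures above lend themselves to back-of-the-envelope arithmetic. The Python sketch below is illustrative only. For cooling, it assumes the non-cooling load (IT equipment, lighting, and so on) stays fixed while cooling falls from about 20 percent to about 7 percent of total energy. For batching, it uses a hypothetical model in which every run pays a fixed overhead plus a per-task cost; the function names and the overhead and per-task values are made up for illustration and are not measurements.

```python
def total_energy_for_cooling_share(non_cooling_energy: float, cooling_share: float) -> float:
    """Total facility energy when cooling makes up `cooling_share` of the total.

    Assumption: the non-cooling load is unchanged, so
    total = non_cooling / (1 - cooling_share).
    """
    return non_cooling_energy / (1.0 - cooling_share)


def energy_for_tasks(num_tasks: int, batch_size: int,
                     overhead_per_run: float, energy_per_task: float) -> float:
    """Energy to process `num_tasks` tasks in runs of up to `batch_size` tasks.

    Hypothetical model: each run pays a fixed overhead (e.g. loading a model or
    waking hardware) plus a per-task cost, so batching amortizes the overhead.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    runs = -(-num_tasks // batch_size)  # ceiling division: number of runs needed
    return runs * overhead_per_run + num_tasks * energy_per_task


# Cooling: 100 units of total energy at a 20% cooling share leaves 80 units of non-cooling load.
before = total_energy_for_cooling_share(80.0, 0.20)  # 100.0
after = total_energy_for_cooling_share(80.0, 0.07)   # ~86.0
print(f"Cooling at 7% instead of 20%: {1 - after / before:.0%} less total energy")

# Batching: 1,000 tasks, 1.0 unit of overhead per run, 1.0 unit per task (illustrative values).
sequential = energy_for_tasks(1000, 1, 1.0, 1.0)  # 2000.0
batched = energy_for_tasks(1000, 100, 1.0, 1.0)   # 1010.0
print(f"Batching 100 tasks per run: {1 - batched / sequential:.0%} less energy")
```

Under these assumptions, lowering the cooling share from 20 to 7 percent cuts total facility energy by roughly 14 percent, and batching saves the most when per-run overhead is large relative to the work done per task.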

Exhibit 6

Enhance flexibility of when and where data centers use energy. Flexibility offers benefits for data centers and utilities alike. Flexible data centers may be able to connect to the grid faster and can potentially be paid for providing demand response services. Demand flexibility helps utilities maintain a stable grid and avoid, delay, or reduce the need for costly grid infrastructure upgrades or new generation capacity. In the United States, if new data centers committed to an annual load curtailment rate of 0.5 percent (a limited number of hours each year), nearly 100 GW of new load could be connected without expanding generation. That's roughly equivalent to 1,000 hyperscale data centers, double what is in the pipeline today.
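The curtailment figures above can be checked with simple arithmetic. This Python sketch assumes an 8,760-hour year and, as a simplification, treats the 0.5 percent curtailment rate as if it were taken entirely as full shutdowns; in practice, curtailment can be spread over more hours at partial load. The function names are hypothetical.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def curtailment_hours(curtailment_rate: float, hours_per_year: int = HOURS_PER_YEAR) -> float:
    """Full-shutdown hours per year equivalent to an annual curtailment rate.

    Simplification: treats the rate as hours at zero load rather than
    partial curtailment spread over more hours.
    """
    return curtailment_rate * hours_per_year


def implied_facility_size_gw(total_load_gw: float, num_facilities: int) -> float:
    """Average facility size implied by spreading a total load across facilities."""
    return total_load_gw / num_facilities


hours = curtailment_hours(0.005)                 # about 43.8 hours per year
size_gw = implied_facility_size_gw(100.0, 1000)  # about 0.1 GW, i.e. roughly 100 MW each
print(f"0.5% curtailment ~ {hours:.1f} full-shutdown hours per year")
print(f"100 GW across 1,000 sites ~ {size_gw * 1000:.0f} MW per site")
```

Taken as full shutdowns, 0.5 percent of the year is about 44 hours, and spreading 100 GW across 1,000 facilities implies an average of about 100 MW each.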

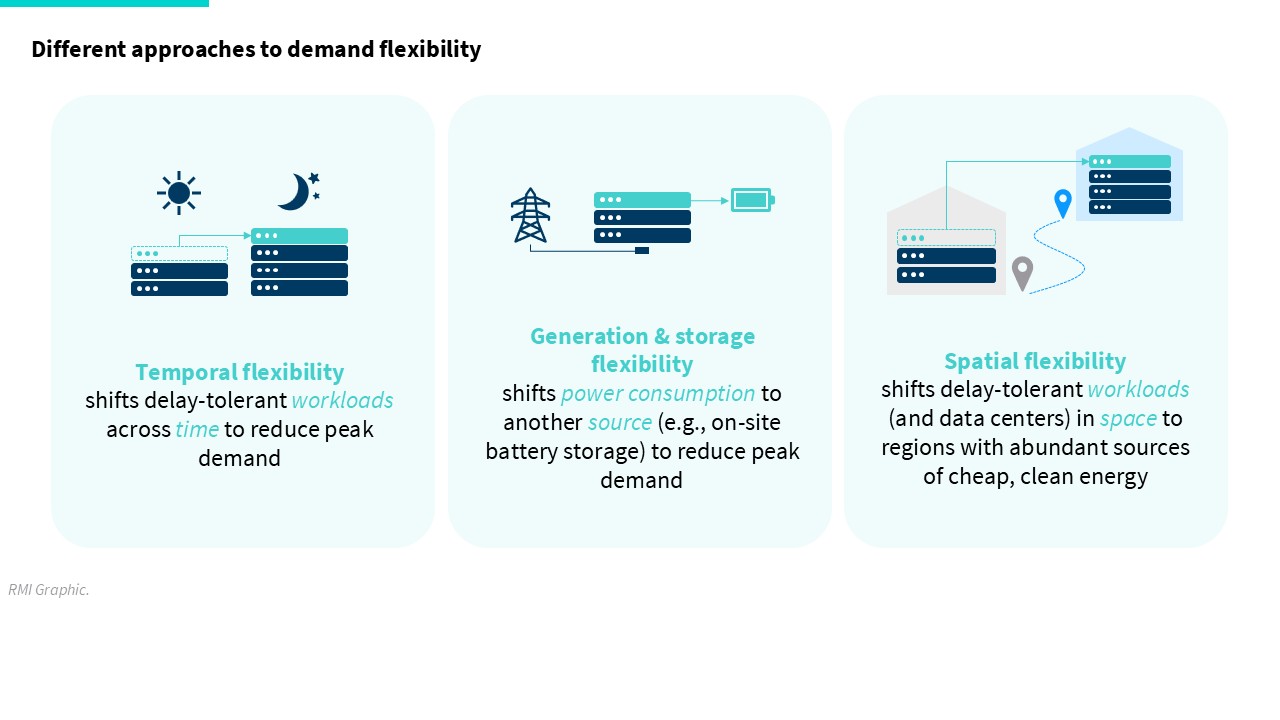

There are a variety of approaches to demand flexibility:

- Temporal flexibility (demand response): Data centers have traditionally been considered inflexible loads, but this is changing with the shift toward AI. Certain workloads such as AI training and machine learning are less time-sensitive than traditional data center workloads and can tolerate brief interruptions.

- Generation and storage flexibility: Data centers already install on-site backup generation to guard against temporary disruptions to grid supply. These are usually emergency-only diesel generators intended to run no more than a handful of times a year, for only a few hours at a time. A move toward more flexible on-site generation and storage options, in particular grid-interactive battery storage, can help with peak shaving and also act as spinning reserves.

- Spatial flexibility: Delay-tolerant workloads can be moved not just in time, but also in space. Currently, this means building some data centers farther from key markets, where renewable energy is cheap and abundant. In the future, a more advanced approach may look like the carbon-intelligent computing platform being piloted by Google, which can shift delay-tolerant tasks to data centers in other regions in response to forecasted grid stress events or an abundance of renewable energy. This requires careful planning and scheduling, along with good data and clear signals of renewable energy availability, to ensure that shifted workloads do not affect local grid reliability.
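The temporal and spatial flexibility described above can be sketched as a simple scheduler: given a forecasted grid-stress score for each region and hour, place a delay-tolerant job in the lowest-stress window that still meets its deadline, and keep time-sensitive jobs in their home region. This is a toy illustration under stated assumptions; the `Job` type, the `pick_slot` function, the region names, and the stress scores are all hypothetical and do not represent Google's carbon-intelligent computing platform or any operator's actual system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    name: str
    duration_h: int  # hours of compute the job needs
    deadline_h: int  # job must finish by this hour (it may occupy hours < deadline_h)
    movable: bool    # True if the job may run in another region


def pick_slot(job: Job, home_region: str, stress: dict[str, list[float]]) -> tuple[str, int]:
    """Return (region, start_hour) minimizing total forecast stress over the job's run.

    `stress[region][h]` is a hypothetical forecast of grid stress (or carbon
    intensity) for hour h; lower is better. Non-movable jobs stay in home_region.
    """
    regions = list(stress) if job.movable else [home_region]
    best = None  # (total_stress, region, start_hour)
    for region in regions:
        forecast = stress[region]
        last_start = min(job.deadline_h, len(forecast)) - job.duration_h
        for start in range(0, last_start + 1):
            cost = sum(forecast[start:start + job.duration_h])
            if best is None or cost < best[0]:
                best = (cost, region, start)
    if best is None:
        raise ValueError(f"no feasible slot for {job.name} before hour {job.deadline_h}")
    return best[1], best[2]


# Hypothetical 6-hour forecasts: "east" is stressed in hours 2-4, "west" has midday surplus.
forecast = {
    "east": [0.3, 0.4, 0.9, 1.0, 0.9, 0.4],
    "west": [0.6, 0.5, 0.2, 0.2, 0.3, 0.7],
}
training = Job("model-training", duration_h=2, deadline_h=6, movable=True)
pinned = Job("batch-report", duration_h=2, deadline_h=6, movable=False)
print(pick_slot(training, "east", forecast))  # ('west', 2)
print(pick_slot(pinned, "east", forecast))    # ('east', 0)
```

Summing the forecast over the whole run, rather than checking only the start hour, keeps a long job from starting in a quiet hour and running into a stress event. A real system would also need to account for data transfer, latency, and spare capacity in the receiving region, which this sketch ignores.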

Exhibit 7

Increase transmission and generation capacity quickly, modularly, reliably, and at lower cost.

One of the least-regrets ways to add capacity to the grid is by deploying alternative transmission technologies (ATTs). These encompass grid-enhancing technologies (GETs), such as dynamic line ratings and advanced power flow controls, as well as advanced conductors. These technologies can meaningfully increase the capacity, flexibility, and efficiency of existing transmission infrastructure. ATTs can also be deployed significantly faster, sometimes in a matter of months, and at a far lower cost than traditional transmission upgrades.

In terms of new generation capacity, solar and onshore wind combined with battery storage are the cheapest and fastest sources, with a typical project lead time of less than two years. In comparison, natural gas plants take three to four years, a timeline now being extended further by turbine shortages. Adding solar and wind to the grid also keeps electricity rates low for consumers, whereas natural gas plants can expose consumers to fuel cost spikes.

Data centers typically purchase solar and wind energy through the grid via green tariffs and power purchase agreements. Many already achieve 100 percent renewable energy on an annual basis using these tools. Tariffs are being used by both utilities and data centers to manage investment and electricity rate risks from building new infrastructure, and to pursue energy efficiency, demand response, and investments in a broader portfolio of grid resources (including ATTs and renewable generation and storage).

We are also increasingly seeing data centers built directly next to new or existing generators, an arrangement known as co-location. This can offer benefits to utilities and the grid, including the opportunity to use otherwise curtailed solar and wind output or, in the case of new solar and wind projects, reduced grid stress. Our recent report on “Power Couples” shows that pairing data centers with new renewable energy and battery storage, using an existing generator point of interconnection, could rapidly satisfy over 50 GW of new data center load in the United States, while improving affordability and maintaining grid reliability. When co-locating with existing generators, it is critical to ensure that those generators continue to meet their historic responsibilities to the grid, so that co-location doesn't increase costs for other ratepayers or affect reliability.

Co-located developments are often financed by the data center operator rather than the utility, which raises the operator's capital costs. But as the rising popularity of the “Bring Your Own Power (BYOP)” approach shows, this is more than offset by the benefits in speed and long-term cost reductions.

Data centers are also actively exploring nuclear and geothermal options, signing agreements with startups working on small modular reactors (SMRs) and enhanced geothermal systems. SMRs may shorten construction times compared with traditional nuclear plants, which often face substantial cost and schedule overruns. Some data centers are also looking to nuclear power plant restarts, with targeted timelines of three to four years.

Geothermal energy has historically been limited to certain geographies, but next-generation geothermal can be deployed more broadly across regions. For these technologies to be commercially competitive, they will need to come down significantly in price. In the United States, utilities are working with industrial customers — including data centers — to create “clean transition tariffs” that would allow willing customers to finance advanced clean energy technology projects on the grid.

Some major tech companies have indicated a willingness to power their data centers with natural gas paired with carbon capture. Data shows that carbon capture, use, and storage (CCUS) technologies considerably increase capital and operating costs and may struggle to become economically competitive.

Exhibit 8

Data centers can be enthusiastic partners investing in future-ready grid solutions

As competition heats up around AI, data center operators are looking to build as fast as possible to stay ahead of the game. In their quest for speed, they are demonstrating a willingness to be flexible on priorities such as location and cost — reflected in the rise of new data center markets, the BYOP model, support for capital commitments, and investment in emerging energy technologies.

This offers a great opportunity for the future of the electricity system. In places like the United States, where aging grid infrastructure is an increasing challenge, the willingness of data centers to invest in fast, flexible, and affordable electricity solutions could be a boon. It will be up to utilities, regulators, and policymakers to direct this momentum in a way that balances economic opportunities with other responsibilities such as ratepayer affordability and renewable energy goals. Viewing data centers as a burden on the electricity system makes utilities more prone to hasty decisions like overbuilding new natural gas capacity. Instead, data centers may be better understood as enthusiastic, capital-rich partners that can help finance desirable, no-regrets upgrades and support the transition to a cleaner and more affordable electricity system for all.

This analysis was made possible by generous support from Galvanize Climate Solutions.